When 12.2 came out, most of the optimizer focus was on SPD and how to avoid the challenges from 12.1. Still, 12.2 introduced several less acclaimed optimizations, including the “Bushy Join” transformation. That is interesting because (I think, corrections welcome) the bushy join concept isn’t necessarily tied to query transformation in general, especially before 12.2 (some references about “manual” bushy joins here and here) or in other RDBMS (a manual example on SQL Server here).

Anyway, since query transformations are the CBO’s way of improving our code, here we go again.

There isn’t much on the internet about Bushy Joins and 12.2 besides this article, so I decided to take a closer look. All the tests are from a vanilla 12.2 installation with bushy joins enabled:

SQL> @hparam bushy

NAME                              DESCRIPTION                         SESSION_VA
--------------------------------- ----------------------------------- ----------
_optimizer_bushy_cost_factor      cost factor for bushy join          100
_optimizer_bushy_fact_dim_ratio   bushy join dimension to fact ratio  20
_optimizer_bushy_fact_min_size    minimumm fact size for bushy join   100000
_optimizer_bushy_join             enables bushy join                  ON
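For anyone without an hparam script at hand, here is a minimal sketch of how the listing above could be reproduced and how the feature can be switched on for a session. The query against x$ksppi and x$ksppcv is an assumption about what such a script does (it needs a SYS connection), and the ON value is simply taken from the listing. Hidden parameters are unsupported, so this belongs on a test system.

```sql
-- Sketch only: list hidden parameters whose name contains 'bushy' (run as SYS).
-- Assumption: a script like hparam reads the x$ksppi / x$ksppcv fixed tables.
select i.ksppinm  as name,
       i.ksppdesc as description,
       v.ksppstvl as session_value
from   x$ksppi  i,
       x$ksppcv v
where  i.indx = v.indx
and    i.ksppinm like '%bushy%'
order  by i.ksppinm;

-- Enable the transformation for the current session only.
-- Assumption: the parameter accepts ON/OFF, as the SESSION_VA column suggests.
alter session set "_optimizer_bushy_join" = on;
```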

The DDL to create the objects is the following:

create table f1 as select a.* from dba_objects a, (select 1 from dual connect by rownum <= 2);

create table d1 as select object_id, object_type from dba_objects;

create table f2 as select a.* from dba_objects a, (select 1 from dual connect by rownum <= 2);

create table d2 as select object_id, object_type from dba_objects;

exec dbms_stats.gather_table_stats(user,'F1');

exec dbms_stats.gather_table_stats(user,'D1');

exec dbms_stats.gather_table_stats(user,'D2');

exec dbms_stats.gather_table_stats(user,'F2');

create index f1_idx1 on f1(object_id);

create index f2_idx1 on f2(object_id);

create index f1_idx2 on f1(object_type);

create index f2_idx2 on f2(object_type);

select table_name, num_rows from user_tables where table_name like 'F_' or table_name like 'D_';

TABLE_NAME      NUM_ROWS
------------- ----------
F1                147200
D1                 73601
F2                147204
D2                 73603

The join to DUAL that doubles the number of rows from DBA_OBJECTS is just there to get more than 100k rows in the two fact tables F1 and F2. The *IDX1 indexes are never used in my examples, but I created them, so in the spirit of full disclosure I included them. Here is the test SQL with its execution plan:

select f1.*, f2.*

from f1, f2, d1, d2

where f1.object_type = f2.object_type

and d1.object_type = f1.object_type

and f2.object_type = d2.object_type

and d1.object_id = 123

and d2.object_id = 456;

---------------------------------------------------------------------
| Id |Operation                      |Name             | Rows | Cost|
---------------------------------------------------------------------
|   0|SELECT STATEMENT               |                 |  208K|  414|
|*  1| HASH JOIN                     |                 |  208K|  414|
|   2|  NESTED LOOPS                 |                 | 3132 |  207|
|   3|   NESTED LOOPS                |                 | 3132 |  207|
|*  4|    TABLE ACCESS FULL          |D1               |    1 |   58|
|*  5|    INDEX RANGE SCAN           |F1_IDX2          | 3132 |    9|
|   6|   TABLE ACCESS BY INDEX ROWID |F1               | 3132 |  148|
|   7|  VIEW                         |VW_BUSHY_A9E4AA31| 3132 |  207|
|   8|   NESTED LOOPS                |                 | 3132 |  207|
|   9|    NESTED LOOPS               |                 | 3132 |  207|
|* 10|     TABLE ACCESS FULL         |D2               |    1 |   58|
|* 11|     INDEX RANGE SCAN          |F2_IDX2          | 3132 |    9|
|  12|    TABLE ACCESS BY INDEX ROWID|F2               | 3132 |  148|
---------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - access("F1"."OBJECT_TYPE"="ITEM_1")

4 - filter("D1"."OBJECT_ID"=123)

5 - access("D1"."OBJECT_TYPE"="F1"."OBJECT_TYPE")

10 - filter("D2"."OBJECT_ID"=456)

11 - access("F2"."OBJECT_TYPE"="D2"."OBJECT_TYPE")

From the execution plan, D1 and F1 are joined together first, and the result is then joined with the result of the join between D2 and F2.

In this case this is a good idea: the two large fact tables are joined to each other directly, with no dimension in between, so without a bushy join the filtering introduced by the two dimensions cannot be applied before a large (and filtered) fact table is joined with another large (and not filtered) fact table. Btw, what I just said is a bit incorrect, since a merge join cartesian between the two dimensions wouldn’t be too bad an idea in this case (it’s actually what the CBO does once Bushy Joins are disabled).
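To see the alternative plan, the transformation can be switched off for the session and the same statement explained again. This is a sketch, assuming the hidden parameter accepts OFF as the counterpart of the ON value shown in the parameter listing.

```sql
-- Sketch: explain the test query with bushy joins switched off,
-- to compare against the VW_BUSHY plan.
-- Assumption: OFF is a valid value for this hidden parameter.
alter session set "_optimizer_bushy_join" = off;

explain plan for
select f1.*, f2.*
from   f1, f2, d1, d2
where  f1.object_type = f2.object_type
and    d1.object_type = f1.object_type
and    f2.object_type = d2.object_type
and    d1.object_id   = 123
and    d2.object_id   = 456;

-- Show the plan that was just explained.
select * from table(dbms_xplan.display);

-- Switch the transformation back on for the remaining tests.
alter session set "_optimizer_bushy_join" = on;
```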

Let’s take a look under the hood, aka 10053 trace 🙂
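For reference, one common way to produce a 10053 trace for the test statement is sketched below. The trace file identifier is an arbitrary name picked for illustration. EXPLAIN PLAN is used because the trace is only written when the statement is hard parsed.

```sql
-- Tag the trace file so it is easy to find (the identifier is arbitrary).
alter session set tracefile_identifier = 'bushy_join';

-- Turn on the optimizer trace for this session.
alter session set events '10053 trace name context forever, level 1';

-- EXPLAIN PLAN forces a hard parse, so the optimizer trace gets written.
explain plan for
select f1.*, f2.*
from   f1, f2, d1, d2
where  f1.object_type = f2.object_type
and    d1.object_type = f1.object_type
and    f2.object_type = d2.object_type
and    d1.object_id   = 123
and    d2.object_id   = 456;

-- Turn the trace off again.
alter session set events '10053 trace name context off';

-- Locate the trace file for the current session.
select value from v$diag_info where name = 'Default Trace File';
```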

As mentioned before, Bushy Join is a CBO transformation, plugged into the CBQT framework.

For some more details about the whole transformation stuff you can look here.

(Notice I skipped a few lines in the output just to make it shorter, the skipped content was either blank or not important here)

The first interesting bit is that the query block is copied (line 1066); it’s nothing new, but it’s worth noticing. Also interesting are lines 1074 and 1075, with the same message and a bit of a grammar puzzle (“validity of” vs “validity for”).

1064 BJ: Checking validity for bushy join for query block SEL$1 (#1)

1066 Registered qb: SEL$1 0x22686940 (COPY SEL$1)

1071 ****************************************

1072 Cost-Based Bushy Join

1073 ****************************************

1074 BJ: Checking validity of bushy join for query block SEL$1 (#1)

1075 BJ: Checking validity for bushy join for query block SEL$1 (#1)

In this case a linear search type (details here) is used for the Bushy Joins, starting from the transformation NOT applied. Notice that all four tables are involved at this point (0,0,0,0) and they don’t seem to be “qualified” yet. This is also a bit different from usual, since the 4 placeholders here seem to be for tables and not for query blocks (as in other transformations, for example subquery unnesting).

The interesting part is that at the end of the costing (physical optimizer) the tables are “identified” as dimensions and facts, including validating stats and structure (the “sructure” typo isn’t mine 😛 ).

1082 BJ: Starting iteration 1, state space = (0,0,0,0) : (0,0,0,0)

1083 BJ: Original query

(physical optimizer here)

3787 BJ: fact stats valid: F2 [F2]

3788 BJ: dim stats valid: D2 [D2]

3789 BJ: dim structure valid: D2 [D2]

3790 BJ: fact sructure valid: F2 [F2]

3791 BJ: fact stats valid: F1 [F1]

3792 BJ: dim stats valid: D1 [D1]

3793 BJ: dim structure valid: D1 [D1]

3794 BJ: fact sructure valid: F1 [F1]

3798 BJ: Updated best state, Cost = 1063.107718

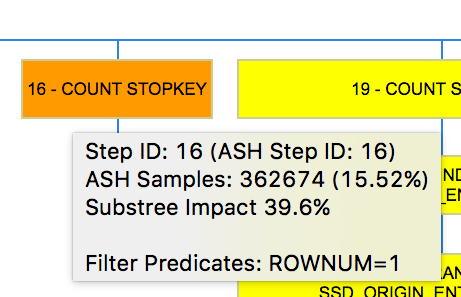

At this point something interesting happens: the CBQT framework (my guess here, easily wrong) starts to focus only on a subset of the objects to consider for the search space / transformation; notice the (4,3).

I couldn’t reliably match those 4 and 3 with something in the 10053 (they aren’t query block numbers, since there is only one starting query block, SEL$1), but an educated guess is that 4 is the “subtree D1,F1” while 3 is the “subtree D2,F2”.

3799 BJ: Starting iteration 2, state space = (4,3) : (0,1)

3800 Registered qb: SEL$A9E4AA31 0x22425a70 (QUERY BLOCK TABLES CHANGED SEL$1)

3804 signature (): qb_name=SEL$A9E4AA31 nbfros=3 flg=0

3805 fro(0): flg=0 objn=83772 hint_alias="D1"@"SEL$1"

3806 fro(1): flg=0 objn=83771 hint_alias="F1"@"SEL$1"

3807 fro(2): flg=5 objn=0 hint_alias="VW_BUSHY_A9E4AA31"@"SEL$A9E4AA31"

3808

3809 Registered qb: SEL$9F959E4D 0x22420780 (SPLIT/MERGE QUERY BLOCKS SEL$A9E4AA31)

3813 signature (): qb_name=SEL$9F959E4D nbfros=2 flg=0

3814 fro(0): flg=0 objn=83774 hint_alias="D2"@"SEL$1"

3815 fro(1): flg=0 objn=83773 hint_alias="F2"@"SEL$1"

3816

3817 Registered qb: SEL$F04E7C56 0x22425a70 (QUERY BLOCK HAS BUSHY JOIN SEL$1; SEL$1; LIST)

3821 signature (): qb_name=SEL$F04E7C56 nbfros=3 flg=0

3822 fro(0): flg=0 objn=83772 hint_alias="D1"@"SEL$1"

3823 fro(1): flg=0 objn=83771 hint_alias="F1"@"SEL$1"

3824 fro(2): flg=1 objn=0 hint_alias="VW_BUSHY_A9E4AA31"@"SEL$A9E4AA31"

and the transformed SQL becomes the following (after reintroducing the crappy “select *” I have in my SQL and doing a little formatting), which is nothing surprising if you looked at the previously linked articles.

3828 SELECT *

FROM (SELECT *

FROM "MPAGANO"."F2" "F2",

"MPAGANO"."D2" "D2"

WHERE "D2"."OBJECT_ID"=456

AND "F2"."OBJECT_TYPE"="D2"."OBJECT_TYPE") "VW_BUSHY_A9E4AA31",

"MPAGANO"."F1" "F1",

"MPAGANO"."D1" "D1"

WHERE "F1"."OBJECT_TYPE"="VW_BUSHY_A9E4AA31"."ITEM_1"

AND "D1"."OBJECT_TYPE"="F1"."OBJECT_TYPE"

AND "D1"."OBJECT_ID"=123

Then the classic approach of any other transformation under CBQT is used (how cool is that? 🙂 ); some more details on how to interpret all this are in the link provided above.

4617 BJ: Updated best state, Cost = 413.807609

4618 BJ: Starting iteration 3, state space = (4,3) : (1,0)

5430 BJ: Not update best state, Cost = 2148.874633

5431 BJ: Starting iteration 4, state space = (4,3) : (1,1)

6182 BJ: Not update best state, Cost = 560.862165

6183 BJ: transformed final query

So the Bushy Join transformation will be applied, specifically “grouping” together just F2 and D2 (that is, (4,3) = (0,1)), since its cost was the lowest, 413.

Just for the sake of completeness, here is the transformed SQL (formatted, with “*” injected again to shorten it) when both “subtrees” go under Bushy Joins:

5458 SELECT *

FROM (SELECT *

FROM "MPAGANO"."F1" "F1",

"MPAGANO"."D1" "D1"

WHERE "D1"."OBJECT_ID"=123

AND "D1"."OBJECT_TYPE"="F1"."OBJECT_TYPE") "VW_BUSHY_B144F3C9",

(SELECT *

FROM "MPAGANO"."F2" "F2",

"MPAGANO"."D2" "D2"

WHERE "D2"."OBJECT_ID"=456

AND "F2"."OBJECT_TYPE"="D2"."OBJECT_TYPE") "VW_BUSHY_A9E4AA31"

WHERE "VW_BUSHY_B144F3C9"."ITEM_1"="VW_BUSHY_A9E4AA31"."ITEM_1"
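For comparison, the same shape can be written by hand, in the spirit of the “manual” bushy joins mentioned at the top. This is only a sketch: the aliases v1 and v2 are made up for illustration, and it assumes NO_MERGE is enough to keep each inline view from being merged back into the main query block.

```sql
-- Sketch of a hand-written bushy join on the test tables.
-- v1 and v2 are hypothetical aliases; NO_MERGE asks the optimizer
-- to keep each inline view as a separate unit.
select /*+ no_merge(v1) no_merge(v2) */
       v1.*, v2.*
from   (select f1.*
        from   f1, d1
        where  d1.object_type = f1.object_type
        and    d1.object_id   = 123) v1,
       (select f2.*
        from   f2, d2
        where  f2.object_type = d2.object_type
        and    d2.object_id   = 456) v2
where  v1.object_type = v2.object_type;
```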

A few other things worth mentioning below.

From the 10053 it seems Star Transformation is an interleaved transformation for Bushy Joins, even though in my case it was never considered, likely due to the simple nature of my SQL.

The transformation can be controlled by the BUSHY_JOIN hint, and the syntax is (surprisingly) pretty trivial for simple SQL statements. You provide, within parentheses, the grouping you want Oracle to apply as the parameter of the hint. For example, in order to force the transformation on both F2,D2 and F1,D1 I can use BUSHY_JOIN((d1 f1) (d2 f2)). An extended and more accurate syntax (including the target query block as well as the source query block for each table) would be better, but still this is much easier than writing a CONCAT hint 🙂
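Applied to the test query, the hint described above would look like the sketch below. The hint text is the one quoted in the previous paragraph; placing it in the main SELECT with no query block qualifier is an assumption that happens to fit this single-block statement.

```sql
-- Sketch: request a bushy join on both (D1,F1) and (D2,F2).
explain plan for
select /*+ bushy_join((d1 f1) (d2 f2)) */
       f1.*, f2.*
from   f1, f2, d1, d2
where  f1.object_type = f2.object_type
and    d1.object_type = f1.object_type
and    f2.object_type = d2.object_type
and    d1.object_id   = 123
and    d2.object_id   = 456;

-- If the hint is honored, the plan should contain two VW_BUSHY_% views.
select * from table(dbms_xplan.display);
```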

There are several “heuristics” that determine whether Bushy Joins are considered, for example the size of the fact table (100k rows according to the parameters above, just guessing here), the number of tables the fact needs to be joined to (at least another 2), the size ratio between dimensions and fact, etc. I don’t have a complete list, but I assume the 10053 would list the reasoning for ignoring Bushy Joins for your SQL (as it did in my case when I looked into this transformation the first time).
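A quick way to see which of the test tables would clear the fact-size threshold is sketched below. It only compares NUM_ROWS from the gathered stats with the 100000 value of _optimizer_bushy_fact_min_size; how the optimizer really applies that threshold (and the other heuristics) is an assumption here.

```sql
-- Sketch: compare table stats with the 100000 value shown for
-- _optimizer_bushy_fact_min_size. The labels are a guess at how the
-- threshold is used, not the optimizer's real decision logic.
select table_name,
       num_rows,
       case
         when num_rows >= 100000 then 'at or above fact min size'
         else 'below fact min size'
       end as size_check
from   user_tables
where  table_name in ('F1', 'F2', 'D1', 'D2')
order  by table_name;
```

With the stats shown earlier, F1 (147200 rows) and F2 (147204 rows) land above the threshold, while D1 (73601) and D2 (73603) land below it.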

There is a lot of guessing in this blog post so if anybody has any correction please let me know and I’ll be happy to make adjustments (and very happy to learn!).

I’m not sure why the feature is disabled by default, maybe it’s not super-solid yet, but I think it can have some nice impact in specific cases.